Zeshan M. Hussain

MD-PhD in HST. MD Candidate at Harvard Medical School. PhD in EECS from MIT.

📍Cambridge, MA

Hello! I am an MD-PhD student in the Harvard-MIT Health Sciences and Technology (HST) program. I recently completed my PhD in Computer Science at MIT, working with David Sontag in the Clinical ML group.

My research broadly deals with developing machine learning methods to assist and improve clinical decision-making in healthcare settings, with the ultimate goal of deploying robust and scalable clinical decision support systems. I am specifically interested in deep generative models of clinical sequential data, causal inference, and physician-AI interaction. See below for further details.

Previously, I completed my B.S. and M.S. in Computer Science at Stanford University, where I worked on deep learning for medical imaging and on data augmentation methods with Daniel Rubin and Chris Re.

Research

My work involves developing the machine learning and statistical methods that will underpin future clinical decision support systems for managing patients with chronic health conditions. During my PhD, I focused on precision oncology, but these ideas extend naturally to any disease process with uncertainty both in therapeutic management and in how patients respond to therapy.

Thus far, I have pursued research along several themes:

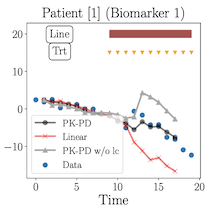

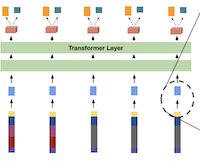

- How will my patient respond holistically to a chosen therapeutic regimen? – Oncologists, and physicians more generally, weigh multiple factors when choosing a treatment, e.g. maximizing survival, minimizing adverse events, and improving quality of life. I have built predictive models of clinical temporal data, both models focused on particular tasks (e.g. biomarker forecasting [ICML 2021]) and general models that make multitask predictions to support holistic management [npj Digital Medicine].

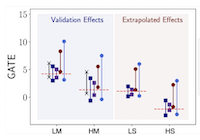

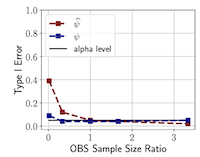

- How can I “sanity check” the predictive and causal estimates that ML models produce for my patients? Additional context about a model's predictions for a new patient or a specific patient population can help a physician judge how reliable the output is. One way to provide this context is uncertainty quantification, where we use techniques from conformal inference to construct intervals around ML model predictions with valid coverage guarantees [AISTATS 2023]. Another is to use experimental data to assess the reliability of causal estimates inferred from observational data [NeurIPS 2022].

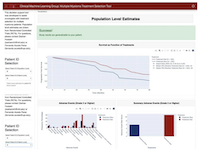

- How will AI-based clinical decision support systems impact physician behavior? I studied physician-AI interaction in clinical oncology, for which I built a prototype clinical decision support system. Using it, I ran user studies to assess how physicians interact with simulated versions of the methods above and how these affect their decision-making [Preprint].
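The conformal inference idea in the “sanity check” theme can be illustrated with generic split conformal prediction for regression. This is a minimal sketch under stated assumptions, not the method from the AISTATS 2023 paper: the toy data, the stand-in `predict` model, and all function names are hypothetical. Given a held-out calibration set that is exchangeable with new patients, the interval `predict(x) ± q` covers the true outcome with probability at least 1 - alpha, regardless of how accurate the underlying model is.

```python
import math
import random


def conformal_threshold(residuals, alpha):
    """Split-conformal threshold: the ceil((n + 1) * (1 - alpha))-th smallest
    calibration residual (1-indexed), or infinity if that rank exceeds n."""
    n = len(residuals)
    k = math.ceil((n + 1) * (1 - alpha))
    if k > n:
        return math.inf  # too few calibration points for this alpha
    return sorted(residuals)[k - 1]


def calibrate(predict, calib_x, calib_y, alpha=0.1):
    """Compute the threshold from absolute residuals on a held-out calibration set."""
    residuals = [abs(y - predict(x)) for x, y in zip(calib_x, calib_y)]
    return conformal_threshold(residuals, alpha)


def interval(predict, q, x_new):
    """Symmetric prediction interval around the model's point prediction."""
    y_hat = predict(x_new)
    return y_hat - q, y_hat + q


def demo_coverage(seed=0, alpha=0.1):
    """Toy check: empirical coverage on fresh data should be close to 1 - alpha."""
    rng = random.Random(seed)

    # Hypothetical data: an outcome y that depends linearly on x plus Gaussian noise.
    def sample(n):
        xs = [rng.uniform(0, 10) for _ in range(n)]
        ys = [2.0 * x + 1.0 + rng.gauss(0, 1.0) for x in xs]
        return xs, ys

    # Hypothetical stand-in for any pretrained model; deliberately a little off.
    def predict(x):
        return 2.1 * x + 0.5

    calib_x, calib_y = sample(500)
    test_x, test_y = sample(2000)
    q = calibrate(predict, calib_x, calib_y, alpha)
    hits = 0
    for x, y in zip(test_x, test_y):
        lo, hi = interval(predict, q, x)
        hits += lo <= y <= hi  # count test points whose outcome falls inside
    return hits / len(test_x)


if __name__ == "__main__":
    print(f"empirical coverage: {demo_coverage():.3f}")
```

The guarantee is marginal (averaged over patients), not conditional on any individual patient; a model that is systematically off simply yields wider intervals rather than invalid ones.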

News

| Date | Event |
|---|---|
| Oct 03, 2023 | Talk at Stanford MedAI Series: Benchmarking Causal Effects from Observational Studies using Experimental Data |
| Jun 12, 2023 | Successfully defended PhD thesis. |

Selected Publications


Under Review

Evaluating Physician-AI Interaction for Cancer Management: Paving the Path towards Precision Oncology. arXiv preprint arXiv:2404.15187, 2024.